Hey 🙋‍♂️, welcome to my brand-new blog about Kubernetes! In this post you will get an introduction to Kubernetes.

It is one of the most interesting topics to learn in DevOps.

Prerequisites to learn Kubernetes

- Linux basics

- Docker or any other containerization tool

- A high-level overview of monolithic and microservice architectures

You should be familiar with these before starting Kubernetes.

Container Orchestration Engine

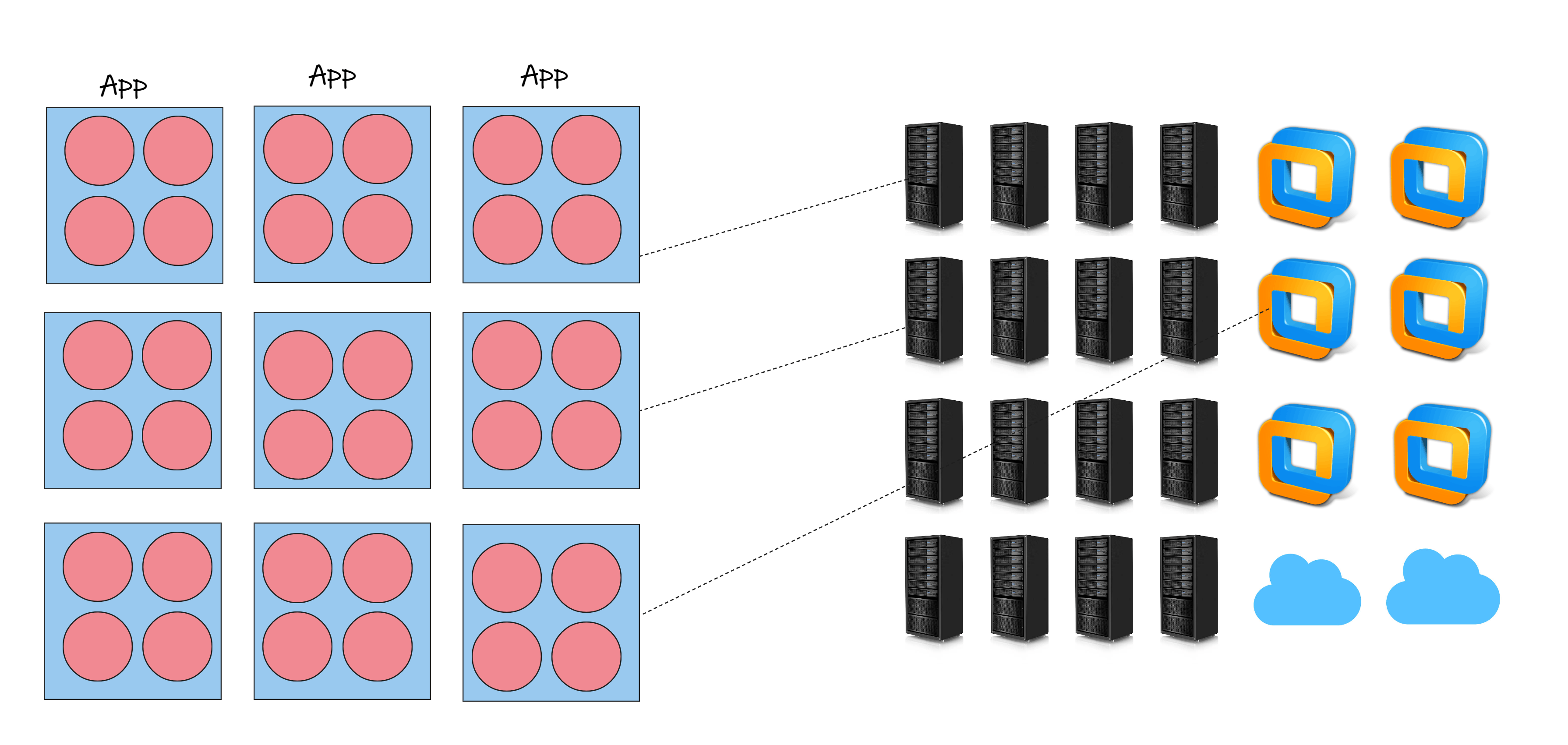

Let's assume we work at a mid-size or large company that has hundreds of apps made up of thousands of microservices. Deploying and managing all these applications manually is almost impossible, and this is where we need a Container Orchestration Engine.

Problems with deploying and managing applications without a Container Orchestration Engine

Imagine that our company has hundreds of apps containing a large number of microservices, and a big IT infrastructure consisting of physical servers, VMs, and cloud VM instances.

Since we are talking about deploying and managing microservices, we need to talk about containers, because most of the time these microservices run inside containers, mostly Docker containers.

If we use Docker containers by themselves, without the help of a container orchestration engine, we run into two problems: clustering and scalability.

Clustering :- If we use the Docker engine by itself, without Docker Swarm mode, we are limited to managing these apps on a single host. Since there is no cluster of servers, if that server fails, our app goes down, and that is a major problem.

Scalability :- Let's imagine that our company just released a product online, and fortunately the demand for it is far greater than initially expected 🥳🥳. The unfortunate part 😟😟 is that the backend web application is overrun with traffic from the outside world and is on the verge of breaking. Now it's time to scale up, but that will take time, and we are not sure the application will stay live until then. Scaling up and down with just the Docker engine is not easy; we need a tool for that, and that tool is called a Container Orchestration Engine (COE).

A Container Orchestration Engine (COE) is a tool that automates deploying, scaling, and managing containerized applications at a large scale in a dynamic environment.

There are many COE tools available; some of the popular ones are Kubernetes, Docker Swarm, and Apache Mesos.

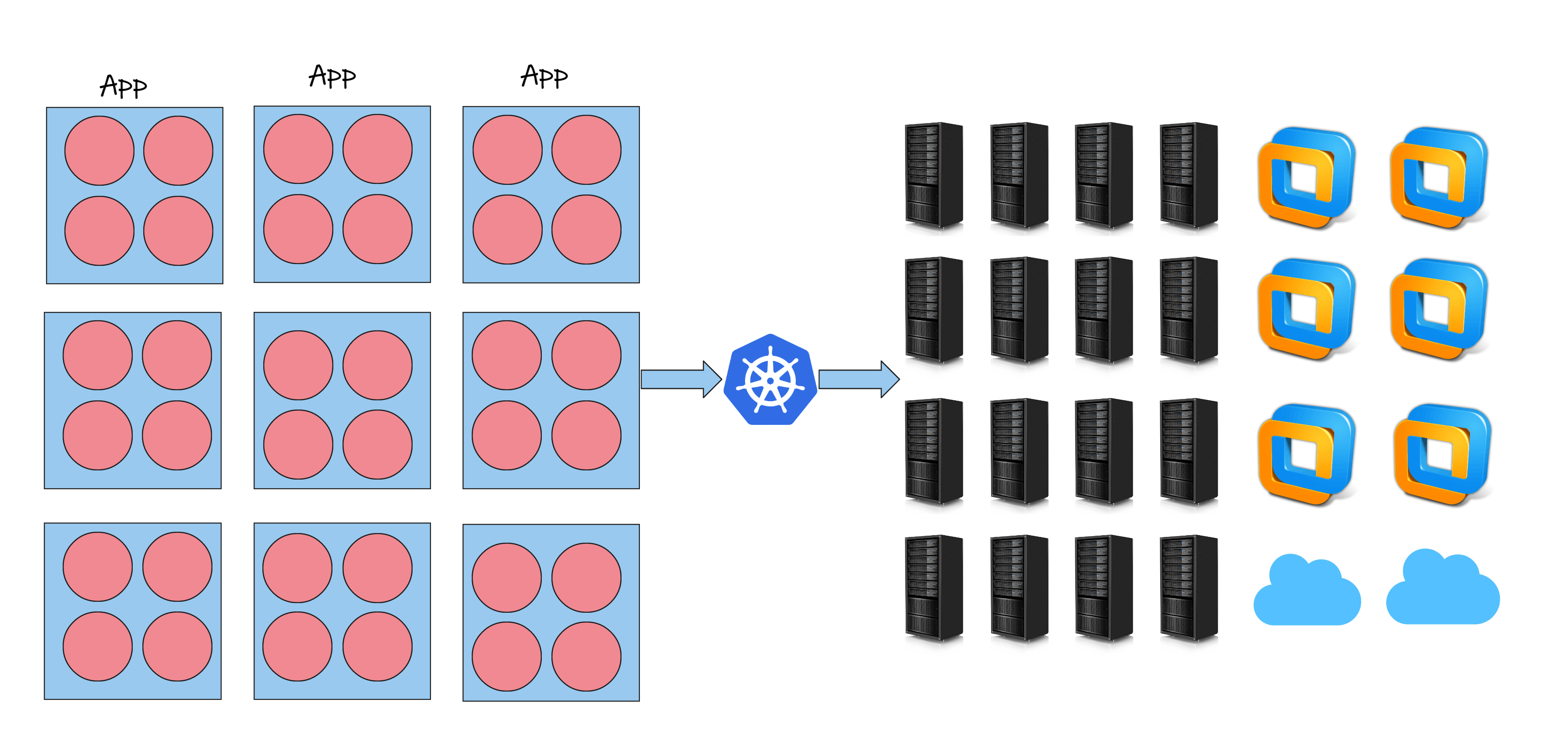

Let's get back to the diagram we saw above, where we have a bunch of apps on one side and a large IT infrastructure on the other, but this time we also have a Container Orchestration Engine in the middle.

There are two primary things that any container orchestration engine performs: clustering and scheduling.

First, let's look at clustering. In most tools, a cluster is formed like this:

We have a master server on which the orchestration engine is installed and configured. Then we join the worker nodes to the master node, forming a cluster of nodes.

The master server acts as the cluster manager, managing the worker nodes in the cluster.

By forming a cluster, we open up a lot of opportunities, primarily fault tolerance and scalability.

The next common feature is scheduling. As the name indicates, we schedule something here: containerized apps onto the worker nodes.

Imagine that we are about to deploy an app, and the requirement is to deploy it only on nodes that have SSD drives. Thanks to the container orchestration engine, we can do exactly that: all we have to do is define the requirement in a config file and submit it to the container orchestration engine. It is then the orchestration engine's responsibility to find the nodes that match the requirement and schedule the app accordingly.
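To make the scheduling idea concrete, here is a minimal Python sketch of "filter, then pick" placement, assuming each node advertises labels such as `disktype: ssd`. In Kubernetes itself this requirement is usually expressed by labelling the SSD nodes and adding a matching `nodeSelector` to the Pod spec, and the kube-scheduler first filters nodes and then scores the ones that remain. The `Node` class, the `schedule()` function, and the "fewest running apps wins" scoring rule below are hypothetical simplifications, not the real scheduler's logic.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical worker node: a name, some labels, and its current load."""
    name: str
    labels: dict = field(default_factory=dict)
    running_apps: int = 0


def schedule(nodes: list, requirement: dict) -> str:
    """Place one app instance on a node that satisfies `requirement`."""
    # Filter: keep only nodes whose labels contain every required key/value pair.
    candidates = [
        node for node in nodes
        if all(node.labels.get(key) == value for key, value in requirement.items())
    ]
    if not candidates:
        raise RuntimeError(f"no node matches {requirement}")
    # Score (hypothetical rule): prefer the matching node running the fewest apps;
    # on a tie, min() keeps the first node in the list.
    chosen = min(candidates, key=lambda node: node.running_apps)
    chosen.running_apps += 1
    return chosen.name


nodes = [
    Node("node-a", {"disktype": "hdd"}),
    Node("node-b", {"disktype": "ssd"}, running_apps=2),
    Node("node-c", {"disktype": "ssd"}),
]
print([schedule(nodes, {"disktype": "ssd"}) for _ in range(3)])
# ['node-c', 'node-c', 'node-b']
```

The HDD node is never chosen, and the instances are spread across the SSD nodes as their load grows.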

At a very high level, clustering and scheduling are the two primary features of a container orchestration engine. Let's discuss some other features a COE provides.

Features of a Container Orchestration Engine

- Clustering :- We already discussed this in detail above.

- Scheduling :- We already discussed this in detail above.

- Scalability :- Scalability is the ability to increase or decrease the number of application instances as traffic demand from the outside world goes up and down. The same applies to the cluster, which we can scale up or down by adding or removing worker nodes. So with the help of a container orchestration engine, we can scale application instances and nodes up and down dynamically as per traffic demand.

- Load Balancing :- Imagine that we have multiple instances of our app running on multiple worker nodes. The container orchestration engine distributes incoming traffic evenly across all application instances, making sure that no single worker node takes all the heat at the same time. That is the responsibility of the load balancer.

- Fault tolerance :- As we know, our containerized apps run inside containers, and these containers run on top of worker nodes. So what if a container goes down, or the worker node itself goes down? This is where the fault tolerance feature comes in. Typically a monitoring process runs on each worker node all the time; it monitors the health status of the containers and the worker node and reports it to the master node. If the COE finds out that a container has failed or stopped for any reason, it recreates the container on the same healthy node. If the COE finds out that a worker node in the cluster is not responding, it reprovisions the containers from the failed node onto a healthy node in the cluster.

- Deployment :- A COE offers different ways to deploy apps. Imagine that version 1 (V1) of application "A" is already deployed and running in production, and many users are currently using it. Now we need to upgrade application "A" from V1 to V2. Since the application is already in use, we have to decide which deployment method to use. One option is to completely remove V1 and then deploy V2; there will be downtime in between, and this method is called Recreate. If we can't afford downtime, we need a different method, such as a rolling update, where V1 is slowly replaced with V2. A container orchestration engine gives us the flexibility to choose between these deployment methods. That's deployment in short.
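The scaling decision described above boils down to a simple ratio. The Python sketch below assumes we measure one load metric (for example average CPU utilization) and have a target value for it; it mirrors the rule documented for the Kubernetes Horizontal Pod Autoscaler, desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The function name and the `min_replicas`/`max_replicas` bounds are hypothetical, the real autoscaler adds safeguards such as a tolerance band that this sketch omits, and scaling worker nodes (rather than app instances) is not covered.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Return how many app instances to run for the observed load.

    Ratio rule: ceil(current_replicas * current_metric / target_metric),
    clamped to [min_replicas, max_replicas]. The bounds are illustrative values.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    desired = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(3, current_metric=90, target_metric=50))  # 6: traffic spike, scale up
print(desired_replicas(4, current_metric=20, target_metric=50))  # 2: quiet period, scale down
```

For example, 3 instances running at 90% CPU against a 50% target give ceil(5.4) = 6 instances, while 4 instances at 20% shrink to 2.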
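Load balancing can be pictured with the simplest strategy that spreads traffic evenly: round robin, where each new request goes to the next app instance in a fixed rotation. This is a hypothetical Python sketch for illustration only; the class name and instance names are made up, and the exact strategy a real COE uses depends on how its networking layer is configured.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hypothetical balancer: hands out app instances in a fixed rotation."""

    def __init__(self, instances: list):
        if not instances:
            raise ValueError("need at least one app instance")
        # cycle() repeats the instance list forever: a, b, c, a, b, c, ...
        self._rotation = cycle(instances)

    def pick(self) -> str:
        """Return the instance that should receive the next request."""
        return next(self._rotation)


balancer = RoundRobinBalancer(["app@node-1", "app@node-2", "app@node-3"])
for request_id in range(6):
    print(request_id, "->", balancer.pick())
```

Six requests over three instances land on each instance exactly twice, so no single worker node gets all the heat.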
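The two self-healing cases described under fault tolerance (recreate a failed container on its healthy node, and recreate the containers of an unresponsive node somewhere else) can be sketched as one pass of a reconciliation loop. This Python sketch assumes the health information has already been reported to the master; the `Node` class, the "running"/"failed" states, and the rule of picking the healthy node with the fewest containers are hypothetical. In Kubernetes the work is actually split between the kubelet on each node and controllers on the control plane, which create replacement Pods rather than moving the old ones.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical worker node as seen by the master after health reports."""
    name: str
    healthy: bool = True
    # Container name -> "running" or "failed".
    containers: dict = field(default_factory=dict)


def reconcile(nodes: list) -> list:
    """Run one self-healing pass over the cluster and return the actions taken."""
    healthy_nodes = [node for node in nodes if node.healthy]
    if not healthy_nodes:
        raise RuntimeError("no healthy node left to run containers on")
    actions = []
    for node in nodes:
        if node.healthy:
            # Case 1: a container failed on a healthy node -> recreate it on the same node.
            for name, state in node.containers.items():
                if state == "failed":
                    node.containers[name] = "running"
                    actions.append(f"recreate {name} on {node.name}")
        else:
            # Case 2: the node stopped responding -> recreate its containers elsewhere,
            # choosing the healthy node that currently hosts the fewest containers.
            for name in list(node.containers):
                target = min(healthy_nodes, key=lambda n: len(n.containers))
                target.containers[name] = "running"
                del node.containers[name]
                actions.append(f"recreate {name} on {target.name} (was on {node.name})")
    return actions


cluster = [
    Node("node-a", containers={"web-1": "running", "web-2": "failed"}),
    Node("node-b", healthy=False, containers={"api-1": "running"}),
    Node("node-c"),
]
for action in reconcile(cluster):
    print(action)
```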
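The difference between the two deployment methods is easiest to see by listing which versions are running at each step. In this hypothetical Python sketch, each step is a list of running instances: Recreate passes through an empty step (the downtime), while a rolling update replaces `batch` instances at a time, so with `batch` smaller than the number of replicas some V1 or V2 instances are always serving. Kubernetes Deployments offer both strategies under the names `Recreate` and `RollingUpdate`; the real rolling update is tuned with `maxSurge` and `maxUnavailable` rather than the simplified `batch` parameter used here.

```python
def recreate(replicas: int, old: str, new: str) -> list:
    """Recreate: stop every old instance, then start every new one."""
    return [
        [old] * replicas,  # before the upgrade
        [],                # all old instances removed: downtime
        [new] * replicas,  # new version fully running
    ]


def rolling_update(replicas: int, old: str, new: str, batch: int = 1) -> list:
    """Rolling update: swap `batch` old instances for new ones per step."""
    if batch < 1:
        raise ValueError("batch must be at least 1")
    state = [old] * replicas
    steps = [list(state)]
    for start in range(0, replicas, batch):
        for i in range(start, min(start + batch, replicas)):
            state[i] = new
        steps.append(list(state))  # the instance list never becomes empty
    return steps


print(recreate(3, "v1", "v2"))
print(rolling_update(3, "v1", "v2"))
```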

What is Kubernetes?

Kubernetes is an open-source container orchestration engine for managing containers at a large scale.

Kubernetes provides all the features needed to run Docker or any other container-based application, including cluster management, scheduling, service discovery, monitoring, secret management, and more.

History

Kubernetes was born and designed at Google, and Google later donated it to the Cloud Native Computing Foundation (CNCF).

Since then, Kubernetes has been governed and maintained by the CNCF.


History of Kubernetes at Google

Containers are not new for Google. They have been using containers for more than a decade. Google runs more than two billion containers every week, and it runs its core products, such as Gmail, YouTube, and Google Search, inside containers.

Kubernetes was released in 2014, so how was Google managing these containers more than a decade before that? 🤔🤔 ----> With their secret proprietary tool, called Borg. The world got to know about Borg only after Kubernetes was released. Google has been using Borg to create clusters of servers, deploy containerized apps, and scale them as per demand.

So when Google saw container technology emerging after Docker released its container engine in 2013, a few engineers at Google, including Joe Beda, Brendan Burns, and Craig McLuckie, thought of building a new tool based on lessons learned from more than a decade of experience in the container space. That tool is now called Kubernetes.

Borg and Kubernetes might be similar in terms of features, but Kubernetes was designed and developed from the ground up, just like any other brand-new tool, and the code of Borg and Kubernetes is completely different. We can think of Kubernetes as a slimmed-down version of Borg.

Currently, Kubernetes is the default container orchestration engine on Google Cloud.

Google announced Kubernetes to the outside world in 2014, but the actual Kubernetes v1.0 was released in mid-2015.

- At the same time, Google donated Kubernetes to the CNCF.

- The CNCF offers official Kubernetes certifications.

- At the time of writing this blog, the latest stable Kubernetes release is 1.24.

Kubernetes is also sometimes called k8s. It is just a short form of Kubernetes, where k is the first letter, s is the last letter, and 8 is the number of characters between them.
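The k8s abbreviation follows a simple counting rule, the same one behind "i18n" for internationalization. Here is a tiny Python sketch of that rule, using a hypothetical `numeronym()` helper:

```python
def numeronym(word: str) -> str:
    """First letter + number of letters in between + last letter."""
    if len(word) <= 3:
        return word  # nothing to shorten
    return f"{word[0]}{len(word) - 2}{word[-1]}"


print(numeronym("kubernetes"))            # k8s
print(numeronym("internationalization"))  # i18n
```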

That's it for this short blog. A full-fledged Kubernetes tutorial blog is on the way, so stay updated and KEEP LEARNING!

Thank you for reading this blog 😊😊